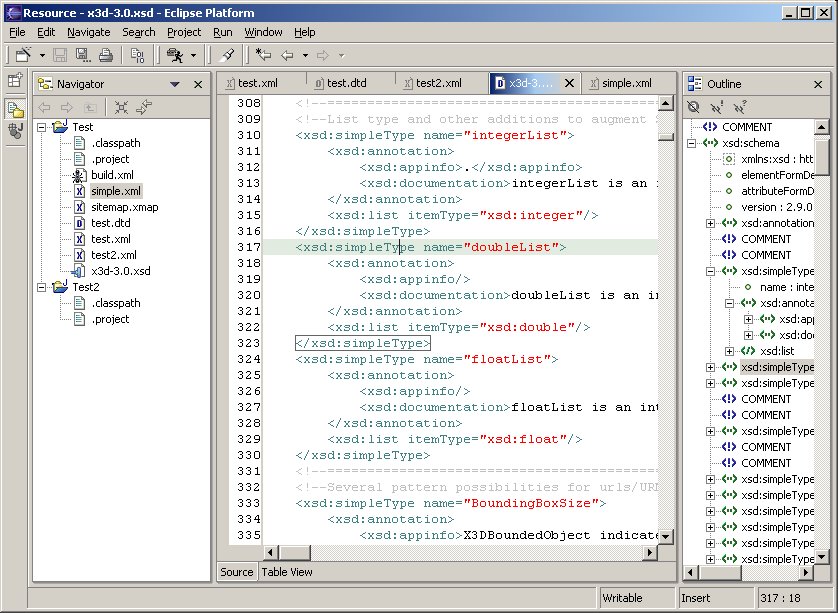

# XML TOOLS WIKIPEDIA CODE

We view the available versions of the database using the following code, which uses the BeautifulSoup library for parsing HTML.

```python
import requests
# Library for parsing HTML
from bs4 import BeautifulSoup

# URL of the Wikimedia dump index (left blank here; fill in before running)
base_url = ''
index = requests.get(base_url).text
soup_index = BeautifulSoup(index, 'html.parser')

# Find the links on the page
dumps = [a['href'] for a in soup_index.find_all('a') if a.has_attr('href')]
dumps
```
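The `dumps` list holds every link on the index page, including navigation links such as `../`. Below is a minimal sketch for picking out the newest snapshot. It assumes the dated dump directories are named `YYYYMMDD/`, which is an assumption about the index layout rather than something stated above, and `latest_dump` is a hypothetical helper name.

```python
import re

# Assumption: dated dump directories look like "20180901/".
DATE_DIR = re.compile(r"^(\d{8})/$")


def latest_dump(links):
    """Return the most recent dated dump directory from a list of hrefs, or None."""
    dated = [link for link in links if DATE_DIR.match(link)]
    if not dated:
        return None
    # YYYYMMDD strings sort chronologically, so max() picks the newest one.
    return max(dated)


links = ["../", "20180801/", "20180901/", "20180820/", "latest/"]
print(latest_dump(links))  # prints 20180901/
```

Comparing the date strings directly avoids parsing them into `datetime` objects, since zero-padded `YYYYMMDD` already orders correctly as text.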

The original impetus for this project was to collect information on every single book on Wikipedia, but I soon realized the solutions involved were more broadly applicable. The techniques covered here and presented in the accompanying Jupyter Notebook will let you efficiently work with any article on Wikipedia and can be extended to other sources of web data. If you'd like to see the data from this article put to use, I wrote a post that uses neural network embeddings to build a book recommendation system. The notebook containing the Python code for this article is available on GitHub. This project was inspired by the excellent Deep Learning Cookbook by Douwe Osinga, and much of the code is adapted from the book. The book is well worth it, and you can access its Jupyter Notebooks at no cost on GitHub.

# Finding and Downloading Data Programmatically

The first step in any data science project is accessing your data! While we could make individual requests to Wikipedia pages and scrape the results, we'd quickly run into rate limits and unnecessarily tax Wikipedia's servers. Instead, we can access a dump of all of Wikipedia through Wikimedia. (A dump is a periodic snapshot of a database.)
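Downloading a dump means fetching a few very large files rather than many small pages. Here is a minimal sketch of a streaming download using only the standard library (`urllib.request` and `shutil`) instead of `requests`. `download_file` is a hypothetical helper, and no specific dump file name is assumed.

```python
import shutil
import urllib.request
from pathlib import Path


def download_file(url, dest, chunk_size=1024 * 1024):
    """Stream `url` to `dest` in chunks of `chunk_size` bytes (hypothetical helper)."""
    dest = Path(dest)
    if dest.exists():
        # Already downloaded: skip it so re-runs do not hit the server again.
        return dest
    tmp = dest.with_name(dest.name + ".part")
    with urllib.request.urlopen(url) as response, open(tmp, "wb") as out:
        # copyfileobj reads and writes one chunk at a time, so the file
        # never has to fit in memory.
        shutil.copyfileobj(response, out, chunk_size)
    # Rename only after a complete download, so an interrupted run
    # never leaves a truncated file under the final name.
    tmp.replace(dest)
    return dest
```

Called once per dump file, this makes one request per file instead of one per article. Writing to a `.part` file first means the "already downloaded" check can never mistake a half-finished transfer for a complete one.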

# XML TOOLS WIKIPEDIA DOWNLOAD

The size of Wikipedia makes it both the world's largest encyclopedia and slightly intimidating to work with. However, size is not an issue with the right tools, and in this article, we'll walk through how we can programmatically download and parse all of the English-language Wikipedia. Along the way, we'll cover a number of useful topics in data science.
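A full dump is far too large to load into memory at once, so parsing has to be streamed. The sketch below uses the standard library's `xml.etree.ElementTree.iterparse` together with `bz2`. It assumes the dump is a bz2-compressed MediaWiki XML export in which each `<page>` holds a `<title>` and a `<revision>` containing `<text>`. This stdlib approach is a stand-in, not necessarily the tooling the original project used, and `iter_pages` is a hypothetical name.

```python
import bz2
import xml.etree.ElementTree as ET


def _local(tag):
    """Strip the XML namespace, e.g. '{http://...}page' -> 'page'."""
    return tag.rsplit("}", 1)[-1]


def iter_pages(fileobj):
    """Yield (title, text) for each <page> in a bz2-compressed MediaWiki XML dump."""
    with bz2.open(fileobj, "rb") as stream:
        # "end" events fire once an element and all its children are parsed.
        for _event, elem in ET.iterparse(stream, events=("end",)):
            if _local(elem.tag) != "page":
                continue
            title = text = None
            for child in elem.iter():
                name = _local(child.tag)
                if name == "title":
                    title = child.text
                elif name == "text":
                    text = child.text or ""
            yield title, text
            # Drop the finished page's children so article text does not pile up.
            elem.clear()
```

Because decompression and parsing both happen incrementally, only about one page's worth of XML is held in memory at a time, regardless of the dump's total size.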

# XML TOOLS WIKIPEDIA FOR FREE

Wikipedia is one of modern humanity's most impressive creations. Who would have thought that in just a few years, anonymous contributors working for free could create the greatest source of online knowledge the world has ever seen? Not only is Wikipedia the best place to get information for writing your college papers, but it's also an extremely rich source of data that can fuel numerous data science projects, from natural language processing to supervised machine learning.
